A Comprehensive Guide to Troubleshooting Network Issues in Ollama and Continue Plugin Setup for Visual Studio Code

- Ctrl Man

- Programming, VS Code

- 23 Sep, 2024

Troubleshooting Network Issues with Ollama Preview on Windows 11

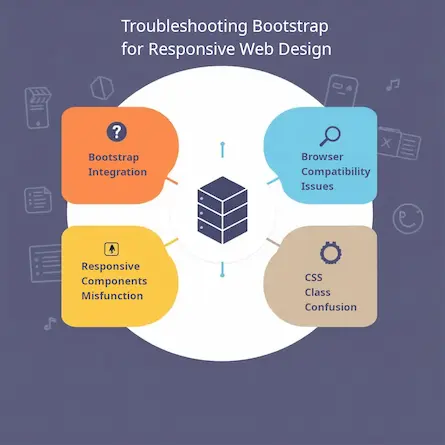

In this guide, we set up a Retrieval-Augmented Generation (RAG)-like environment in Visual Studio Code (VS Code), using Ollama to power the autocomplete feature of the Continue plugin. The goal is a better development experience, with Ollama’s large language models providing code completion and other advanced features. Network-related issues can arise while configuring such tools, however, and this guide helps you troubleshoot and resolve the most common problems with Ollama on a Windows 11 system.

Potential Causes

- Automatic Startup: Applications may start automatically at boot, even if they’re not listed in `msconfig`.

- Port Conflicts: Another process might already be using the port Ollama requires (11434 by default).

- System Resources: Insufficient memory or processing power could hinder Ollama’s performance.

Step-by-Step Solutions

- Stop Unwanted Services:
  - Open Task Manager (Ctrl + Shift + Esc), go to the “Services” tab, and stop any unnecessary services, including those related to Ollama.
  - Alternatively, disable them via the Registry Editor (`regedit`) or `services.msc`.

- Check Port Availability:
  - In Command Prompt, run:

        netstat -ano | findstr 11434

  - If a process is using the port, stop it using the PID shown in the last column of the `netstat` output:

        taskkill /PID [process_id] /F

- Disable Unwanted Automatic Startup:
  - Use `regedit` to stop any Ollama-related services from starting automatically: under `HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Services`, open the service’s key and set its `Start` value to `4` (Disabled).

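- Manually Start Ollama:
  - Make sure no other Ollama instance is still running (otherwise the new server cannot bind to port 11434), then launch Ollama manually by running:

        ollama serve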

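- Ensure Proper Plugin Configuration:
  - In VS Code, check File > Preferences > Settings and confirm that `Continue.serverURL` and the other Continue settings are correctly configured for Ollama.
  - Continue’s model entries, including the autocomplete model, live in its `config.json` file under `~/.continue`. The Ollama entries should use the `ollama` provider and point at Ollama’s address, `http://localhost:11434` by default.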

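- Check Logs for Issues:
  - Ollama has no `--log-level` flag; enable debug logging by setting the `OLLAMA_DEBUG` environment variable before starting the server (Command Prompt shown):

        set OLLAMA_DEBUG=1
        ollama serve

  - Review the console output for any errors that might explain the issue. If Ollama was started by its Windows app rather than from a terminal, look for `server.log` in `%LOCALAPPDATA%\Ollama`.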

- Restart Your System:
  - A restart can often resolve configuration or network issues.
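The Stop Unwanted Services step starts with working out which Ollama processes are actually running. The following Python sketch does that from the command line. It assumes Python 3.9+ and the CSV output of `tasklist /FO CSV /NH` (image name in the first column, PID in the second), and it treats any image name starting with `ollama` as relevant, which is an assumption about how the processes are named on your install.

```python
import csv
import io
import subprocess
import sys


def parse_tasklist_csv(text: str, prefix: str = "ollama") -> list:
    """Return (image_name, pid) pairs whose image name starts with `prefix`.

    Expects `tasklist /FO CSV /NH` output: one quoted CSV row per process,
    image name in column 0 and PID in column 1.
    """
    matches = []
    for row in csv.reader(io.StringIO(text)):
        if len(row) < 2:
            continue  # skip blank or malformed lines
        name, pid = row[0], row[1]
        if name.lower().startswith(prefix.lower()) and pid.isdigit():
            matches.append((name, int(pid)))
    return matches


def list_ollama_processes() -> list:
    """Run tasklist (Windows only) and return the Ollama-looking processes."""
    if sys.platform != "win32":
        return []
    out = subprocess.run(["tasklist", "/FO", "CSV", "/NH"],
                         capture_output=True, text=True, check=True).stdout
    return parse_tasklist_csv(out)


if __name__ == "__main__":
    for name, pid in list_ollama_processes():
        print(f"{name}\tPID {pid}")
```

Any PID it prints can then be ended from Task Manager or with `taskkill /PID <pid> /F`.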
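The Check Port Availability step can also be scripted. This Python sketch (an illustration, not part of Ollama or Continue) answers two questions: does anything accept TCP connections on port 11434, and which PIDs own a local socket on that port according to `netstat -ano`. The parser assumes the usual `Proto  Local Address  Foreign Address  State  PID` column layout.

```python
import socket

OLLAMA_PORT = 11434  # Ollama's default port


def port_in_use(port: int = OLLAMA_PORT, host: str = "127.0.0.1",
                timeout: float = 1.0) -> bool:
    """Return True if something accepts TCP connections on (host, port)."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
        s.settimeout(timeout)
        try:
            return s.connect_ex((host, port)) == 0
        except OSError:  # e.g. timeout or unreachable host
            return False


def pids_on_port(netstat_output: str, port: int = OLLAMA_PORT) -> set:
    """Collect PIDs from `netstat -ano` rows whose local address uses `port`."""
    pids = set()
    for line in netstat_output.splitlines():
        parts = line.split()
        # Data rows start with the protocol and end with the owning PID.
        if len(parts) < 4 or parts[0] not in ("TCP", "UDP") or not parts[-1].isdigit():
            continue
        local_port = parts[1].rsplit(":", 1)[-1]  # works for IPv4 and [::]:port
        if local_port == str(port):
            pids.add(int(parts[-1]))
    return pids


if __name__ == "__main__":
    print(f"port {OLLAMA_PORT} in use:", port_in_use())
```

Filtering on the local address is the main design choice: `findstr 11434` also matches connections made to Ollama, for example by VS Code, and ending those PIDs would not free the port.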
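For Disable Unwanted Automatic Startup, Windows’ built-in `sc` tool can make the same change as editing the service’s `Start` value in the registry: `sc config <name> start= disabled` sets `Start` to `4`. The Python sketch below only builds those commands and, when asked, runs them; the helper names are illustrative, and the service name you pass in should be the one shown in `services.msc`.

```python
import subprocess
import sys


def disable_service_commands(service_name: str) -> list:
    """Build the `sc` commands that stop a service and disable its autostart.

    `sc config ... start= disabled` writes the same registry `Start` value (4)
    as editing HKLM\\SYSTEM\\CurrentControlSet\\Services\\<name> by hand.
    `sc` expects a space after "start=", so it is passed as its own argument.
    """
    return [
        ["sc", "stop", service_name],
        ["sc", "config", service_name, "start=", "disabled"],
    ]


def disable_service(service_name: str, dry_run: bool = True) -> None:
    """Print the commands (dry run or non-Windows) or execute them."""
    for cmd in disable_service_commands(service_name):
        if dry_run or sys.platform != "win32":
            print("would run:", " ".join(cmd))
        else:
            # Needs an elevated prompt. check=False because `sc stop`
            # reports an error if the service is already stopped.
            subprocess.run(cmd, check=False)
```

For example, `disable_service("ExampleOllamaService", dry_run=False)` from an elevated prompt, where `ExampleOllamaService` is a hypothetical name. If you type the commands yourself in PowerShell, use `sc.exe`, because `sc` there is an alias for `Set-Content`.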
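After Manually Start Ollama, it is worth confirming that the server actually answers HTTP requests before looking at the plugin. This Python sketch polls Ollama’s `GET /api/version` endpoint on the default address `http://127.0.0.1:11434`; the helper names, timeouts and polling interval are illustrative choices, not values from Ollama or Continue.

```python
import http.client
import json
import time
import urllib.request
from typing import Optional

OLLAMA_URL = "http://127.0.0.1:11434"  # default address; change if you set OLLAMA_HOST


def ollama_version(base_url: str = OLLAMA_URL, timeout: float = 2.0) -> Optional[str]:
    """Return the version from GET /api/version, or None if the server is unreachable."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/version", timeout=timeout) as resp:
            return json.load(resp).get("version")
    except (OSError, http.client.HTTPException, ValueError, AttributeError):
        # URLError, HTTPError and timeouts are OSError subclasses; HTTPException
        # covers non-HTTP replies; ValueError/AttributeError cover unexpected bodies.
        return None


def wait_for_ollama(base_url: str = OLLAMA_URL, total_wait: float = 30.0,
                    interval: float = 1.0) -> Optional[str]:
    """Poll until the server answers or `total_wait` seconds pass."""
    deadline = time.monotonic() + total_wait
    while True:
        version = ollama_version(base_url)
        if version is not None or time.monotonic() >= deadline:
            return version
        time.sleep(interval)
```

For example, run `ollama serve` in one terminal and call `wait_for_ollama()` from another: a version string means the server is reachable, while `None` after the timeout points back to the port and startup checks.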
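For Ensure Proper Plugin Configuration, a quick sanity check of Continue’s configuration file can catch a wrong port or a missing model name. This Python sketch assumes a `config.json` under `~/.continue` with a `models` list plus a single `tabAutocompleteModel` object, each using `provider`, `model` and `apiBase` keys; if your version of the plugin uses a different file or layout, adjust the lookups.

```python
import json
from pathlib import Path
from urllib.parse import urlsplit

OLLAMA_PORT = 11434  # Ollama's default port
CONFIG_PATH = Path.home() / ".continue" / "config.json"  # assumed location


def find_config_problems(cfg: dict) -> list:
    """Return readable problems with the Ollama-backed entries of a Continue config."""
    problems = []
    entries = list(cfg.get("models", []))
    if "tabAutocompleteModel" in cfg:
        entries.append(cfg["tabAutocompleteModel"])
    else:
        problems.append("no tabAutocompleteModel entry (autocomplete has no model)")
    for entry in entries:
        if entry.get("provider") != "ollama":
            continue  # only Ollama-backed entries are checked here
        title = entry.get("title") or entry.get("model") or "<untitled>"
        if not entry.get("model"):
            problems.append(f"{title}: missing 'model' name")
        api_base = entry.get("apiBase")
        # Without apiBase, the plugin is assumed to use its default local address.
        if api_base is not None and urlsplit(api_base).port != OLLAMA_PORT:
            problems.append(f"{title}: apiBase {api_base!r} does not use port {OLLAMA_PORT}")
    return problems


def load_continue_config(path: Path = CONFIG_PATH) -> dict:
    with path.open(encoding="utf-8") as f:
        return json.load(f)


if __name__ == "__main__":
    if CONFIG_PATH.exists():
        for problem in find_config_problems(load_continue_config()):
            print("-", problem)
```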
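Reviewing logs by hand (Check Logs for Issues) gets tedious on long runs, so a small filter helps. The Python sketch below keeps warnings, errors and port-binding failures from a log file. It assumes the log tags lines with `level=ERROR` / `level=WARN` and that the Windows app writes its server log to `%LOCALAPPDATA%\Ollama\server.log`; both the pattern and the path are assumptions to adjust. If you run `ollama serve` in a terminal instead, you can capture its output with `ollama serve > ollama.log 2>&1` and scan that file.

```python
import os
import re
from pathlib import Path
from typing import Iterable, List

# Assumed default server-log location for the Windows app.
DEFAULT_LOG = Path(os.environ.get("LOCALAPPDATA", "")) / "Ollama" / "server.log"

# Assumes "level=ERROR" / "level=WARN" tags; adjust if your log format differs.
LEVEL_RE = re.compile(r"\blevel=(ERROR|WARN)\b")
# Illustrative substrings for port-binding failures (lowercase for matching).
BIND_HINTS = ("bind:", "address already in use",
              "only one usage of each socket address")


def interesting_lines(lines: Iterable[str]) -> List[str]:
    """Keep lines that carry a warning/error level or mention a bind failure."""
    hits = []
    for line in lines:
        lowered = line.lower()
        if LEVEL_RE.search(line) or any(hint in lowered for hint in BIND_HINTS):
            hits.append(line.rstrip("\n"))
    return hits


def scan_log(path: Path = DEFAULT_LOG) -> List[str]:
    # errors="replace" keeps the scan going past any undecodable bytes.
    with path.open(encoding="utf-8", errors="replace") as f:
        return interesting_lines(f)


if __name__ == "__main__":
    if DEFAULT_LOG.is_file():
        print("\n".join(scan_log()))
```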

Additional Tips

- Update Software: Ensure both Ollama and the Continue plugin are up-to-date.

- Check System Resources: Make sure your system has enough available memory and processing power to handle LLMs.
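To make the resource check concrete: a model’s weights alone need roughly parameters × bits per weight ÷ 8 bytes, so a 7-billion-parameter model quantized to 4 bits needs about 3.5 GB before the context (KV) cache and runtime buffers are counted. The Python sketch below applies that arithmetic. The 20% headroom factor is a hypothetical rule of thumb, and real quantization formats store extra per-block data, so treat the result as a lower bound and compare it with your free RAM or VRAM.

```python
def weight_memory_gb(params_billions: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights alone, in decimal gigabytes."""
    # params * bits / 8 gives bytes; billions of bytes = GB.
    return params_billions * bits_per_weight / 8


def likely_fits(params_billions: float, bits_per_weight: float,
                available_gb: float, headroom: float = 1.2) -> bool:
    """Compare the weights plus a hypothetical 20% headroom to the free memory."""
    return weight_memory_gb(params_billions, bits_per_weight) * headroom <= available_gb


if __name__ == "__main__":
    for bits in (4, 8, 16):
        print(f"7B model at {bits}-bit: ~{weight_memory_gb(7, bits):.1f} GB of weights")
```

Run as a script, it prints about 3.5, 7.0 and 14.0 GB for the three precisions, which shows why quantized models are the practical choice on machines with 8 GB of memory.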