Building a RAG-Like Assistant with Qwen2 7B

- Ctrl Man

- Technology, AI Development

- 02 Sep, 2024

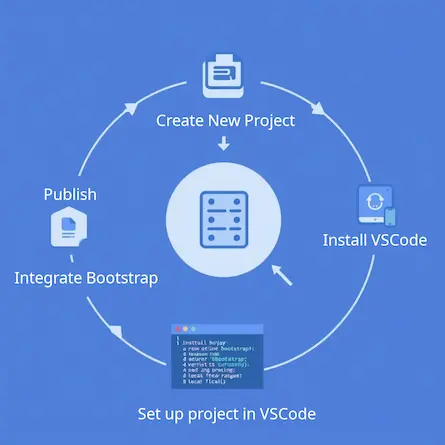

Crafting a RAG-Like Solution with the Open-Source, Apache-Licensed Qwen2 7B LLM, Using LM Studio and the Continue Plugin for Visual Studio Code

Introduction

Retrieval-Augmented Generation (RAG) enhances the capabilities of large language models (LLMs) by integrating external data retrieval into the response generation process. Grounding model outputs in up-to-date, contextually appropriate information improves their accuracy and relevance.

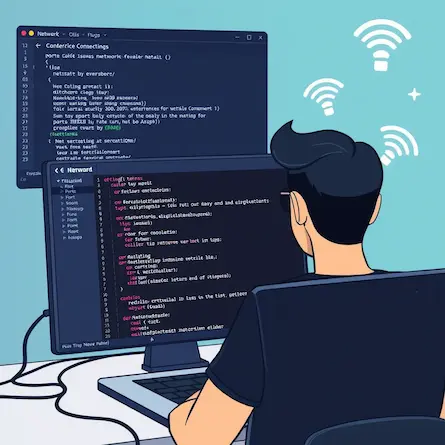

In this guide, we focus on your current setup, which utilizes the open-source Qwen2 7B model running on LM Studio as a local server, combined with the Continue plugin integrated with Visual Studio Code. While this setup works effectively for single-session interactions, enhancing it with RAG-like features can significantly improve the overall user experience by enabling context retention and retrieval-based responses.

What Makes a Solution More or Less RAG-Like?

Retrieval Component

In RAG systems, the retrieval component plays a central role by fetching relevant data from a knowledge base or document store and feeding it to the LLM for response generation. This component ensures that the model’s outputs are grounded in factual information, increasing their accuracy and relevance.

- Current Setup: Your current configuration, involving Qwen2 7B running locally on LM Studio, does not explicitly include a retrieval mechanism. This means the model generates responses solely based on its internal knowledge and the provided input context.

- Potential Enhancements: Incorporating a retrieval mechanism could dramatically improve your system. Embedding-based retrieval methods, like those employed by the Continue plugin, can help pull relevant context from your codebase or other data sources. This retrieval step is crucial for providing more accurate, contextually enriched responses that feel tailored to the user’s needs.

Combining Retrieval with Generation

The seamless integration of retrieved data into the generation process is key to creating a truly RAG-like system. By feeding retrieved content directly into the LLM’s input, the model can generate answers that are not only contextually accurate but also grounded in specific, relevant information.

- Current Setup: Currently, Qwen2 7B generates responses without the benefit of additional retrieved data. While the responses are coherent, they may lack the specificity and grounding that comes from direct integration of external information.

- Improvement Opportunities: By adopting retrieval components like the Continue plugin’s codebase context providers, you can enhance your setup. This integration allows the LLM to combine retrieved snippets of code or documentation directly into its response, enhancing the overall quality and usefulness of the output.

Context Memory (State Management)

Context memory is a critical aspect of RAG systems, especially for applications that require multi-turn interactions. Effective context management allows the model to maintain an ongoing dialogue, keeping track of previous exchanges to avoid repetition and enhance the coherence of the conversation.

- Current Setup: Your current system performs well in single-session scenarios but lacks mechanisms for maintaining context across multiple interactions. This limitation can make extended conversations feel disjointed, as the model does not remember previous exchanges.

- Enhancement Strategies: Integrating a memory mechanism, similar to what’s used in more advanced RAG systems, can improve the flow of multi-turn dialogues. This approach would allow the model to retain context over multiple interactions, making the assistant more responsive and conversationally aware.

Enhancing Your Solution with Codebase Retrieval

Integration of a Retrieval Mechanism

Embedding-based retrieval methods offer a significant improvement over traditional keyword searches by capturing the semantic meaning of data, allowing for more precise and context-aware retrieval. The Continue plugin for Visual Studio Code takes this approach: it indexes your codebase and makes it searchable through embeddings.

- Using the Continue Plugin: The Continue plugin’s “codebase” and “folder” context providers enable efficient retrieval from your workspace using embeddings calculated locally with all-MiniLM-L6-v2. This setup allows you to ask high-level questions about your codebase, generate code samples based on existing patterns, and retrieve relevant files or snippets as needed.

- Capabilities and Limitations: The retrieval process enhances the model’s ability to provide contextually relevant code suggestions. However, it is limited to what’s indexed locally and does not yet integrate broader external data sources or real-time information.
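To make the ranking step concrete, here is a minimal sketch of similarity-based retrieval. It is not how Continue is implemented: the `embed` function is a toy bag-of-words stand-in for a real embedding model such as all-MiniLM-L6-v2, and the file names and snippets in `CHUNKS` are hypothetical. Only the overall shape (embed the query, score each chunk by cosine similarity, keep the best matches) carries over.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9_]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, chunks: dict[str, str], top_k: int = 2) -> list[tuple[str, float]]:
    """Return the top_k chunk names ranked by similarity to the query."""
    q = embed(query)
    scored = [(name, cosine(q, embed(text))) for name, text in chunks.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)[:top_k]


# Hypothetical indexed snippets; a real index would hold chunks of your workspace.
CHUNKS = {
    "auth.py": "def login(user, password): check password hash and create session",
    "db.py": "def connect(url): open database connection pool",
    "utils.py": "def slugify(title): lowercase title and replace spaces",
}

results = retrieve("how is the password checked at login", CHUNKS)
print(results[0][0])  # name of the best-matching chunk
```

A word-count vector only matches exact tokens, which is precisely the weakness that learned embeddings address, so treat the scoring function as a placeholder for the real model.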

Combining Retrieved Information with Model Generation

Integrating retrieved content into the input of Qwen2 7B can dramatically improve the responses’ quality. By feeding relevant snippets or contextual data directly into the LLM’s prompt, the model can generate outputs that are more specific and grounded.

- Implementation: Modify your current setup to allow retrieved data to be passed along with user queries as part of the input context. For instance, when the Continue plugin retrieves relevant code snippets, these can be directly embedded into the prompt given to Qwen2 7B, enhancing the model’s response.
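The idea of passing retrieved data alongside the query can be sketched as follows. Continue assembles its own prompts when you reference a context provider, so this is only an illustration of the principle. The model identifier `qwen2-7b-instruct` and the snippet contents are hypothetical, and the payload shape assumes an OpenAI-style chat completions request of the kind LM Studio's local server accepts.

```python
import json


def build_messages(question: str, snippets: list[tuple[str, str]]) -> list[dict]:
    """Place retrieved snippets ahead of the user's question in a chat prompt."""
    context = "\n\n".join(f"### {path}\n{code}" for path, code in snippets)
    system = (
        "Answer using the retrieved context below. "
        "If the context does not cover the question, say so.\n\n" + context
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": question},
    ]


def build_payload(question: str, snippets: list[tuple[str, str]],
                  model: str = "qwen2-7b-instruct") -> dict:
    """Build a chat-completions request body; the model name is a placeholder."""
    return {
        "model": model,
        "messages": build_messages(question, snippets),
        "temperature": 0.2,
    }


# Hypothetical retrieval result: (file path, snippet text) pairs.
retrieved = [("auth.py", "def login(user, password): ...")]
payload = build_payload("Where is login defined?", retrieved)
print(json.dumps(payload, indent=2))
```

Keeping the retrieved text in the system message and the question in the user message makes it easy to swap in different snippets per query without touching the question itself.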

Implementing Context Memory for Multi-Turn Interactions

Maintaining conversation context across multiple interactions is essential for creating a seamless dialogue experience. Implementing a memory mechanism that keeps track of previous exchanges can significantly improve the user’s interaction with the assistant.

- Current Challenges: Without state management, your setup is constrained to isolated single-turn queries, which limits its ability to provide coherent multi-turn interactions.

- Enhancement Approach: To overcome this, consider implementing a lightweight context memory that records the last few exchanges and feeds them back into the model. This would enable Qwen2 7B to respond with a greater awareness of the ongoing conversation, making the experience feel more natural and dynamic.
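A lightweight memory of this kind might look like the sketch below. The class name `ConversationMemory` and its methods are hypothetical, and the example exchanges are invented for illustration. It keeps a fixed number of recent exchanges and replays them as chat messages ahead of each new question.

```python
from collections import deque


class ConversationMemory:
    """Keeps only the most recent exchanges (user + assistant pairs)."""

    def __init__(self, max_exchanges: int = 3):
        # A deque with maxlen silently drops the oldest exchange when full.
        self._exchanges = deque(maxlen=max_exchanges)

    def record(self, user: str, assistant: str) -> None:
        """Store one completed exchange."""
        self._exchanges.append((user, assistant))

    def messages(self, new_question: str) -> list[dict]:
        """Return the remembered history followed by the new question."""
        history = []
        for user, assistant in self._exchanges:
            history.append({"role": "user", "content": user})
            history.append({"role": "assistant", "content": assistant})
        return history + [{"role": "user", "content": new_question}]


memory = ConversationMemory(max_exchanges=2)
memory.record("What does login() do?", "It checks the password hash.")
memory.record("Where is it defined?", "In auth.py.")
memory.record("Does it create a session?", "Yes, after a successful check.")

# Only the last two exchanges survive, followed by the new question.
msgs = memory.messages("How long does the session last?")
```

Capping the history by exchange count is the simplest policy. With a 7B model running locally, a cap based on token count may be a better fit for the context window, at the cost of needing a tokenizer.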

Configuring Your Setup: Example config.json for Windows Users

To configure your system for the best performance and integration, use the following config.json example tailored for Windows users:

    {
      "models": [
        {
          "title": "LM Studio",
          "provider": "lmstudio",
          "model": "llama2-7b"
        }
      ],
      "customCommands": [
        {
          "name": "test",
          "prompt": "{{{ input }}}\n\nWrite a comprehensive set of unit tests for the selected code. It should setup, run tests that check for correctness including important edge cases, and teardown. Ensure that the tests are complete and sophisticated. Give the tests just as chat output, don't edit any file.",
          "description": "Write unit tests for highlighted code"
        }
      ],
      "tabAutocompleteModel": {
        "title": "Starcoder2 3b",
        "model": "second-state/StarCoder2-3B-GGUF/starcoder2-3b-Q8_0.gguf",
        "provider": "lmstudio"
      },
      "contextProviders": [
        { "name": "code", "params": {} },
        { "name": "docs", "params": {} },
        { "name": "diff", "params": {} },
        { "name": "terminal", "params": {} },
        { "name": "problems", "params": {} },
        { "name": "folder", "params": {} },
        { "name": "codebase", "params": {} }
      ],
      "slashCommands": [
        { "name": "edit", "description": "Edit selected code" },
        { "name": "comment", "description": "Write comments for the selected code" },
        { "name": "share", "description": "Export the current chat session to markdown" },
        { "name": "cmd", "description": "Generate a shell command" },
        { "name": "commit", "description": "Generate a git commit message" }
      ],
      "embeddingsProvider": {
        "provider": "transformers.js"
      }
    }

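This configuration file sets up the chat model, the tab-autocomplete model, the context providers (including the “codebase” and “folder” providers used for retrieval), the slash and custom commands, and the embeddings provider. Note that the "model" value in the example reads llama2-7b; for the Qwen2 7B setup described in this article, it should presumably be replaced with the identifier LM Studio shows for the model you have loaded.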
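Before restarting the editor, a quick check that the file parses and that the retrieval-related entries are present can save some guesswork. The sketch below is a hypothetical helper, not part of Continue. It works on a JSON string, here a shortened sample of the config, so you would read your own config.json from wherever it is stored and pass its contents in.

```python
import json


def summarize_config(raw: str) -> dict:
    """Parse a Continue-style config and report the retrieval-related settings."""
    config = json.loads(raw)  # raises json.JSONDecodeError on malformed JSON
    providers = [p["name"] for p in config.get("contextProviders", [])]
    return {
        "chat_models": [m.get("model") for m in config.get("models", [])],
        "context_providers": providers,
        "has_codebase_retrieval": "codebase" in providers,
        "embeddings_provider": config.get("embeddingsProvider", {}).get("provider"),
    }


# Shortened sample of the configuration, for illustration only.
SAMPLE = """
{
  "models": [{"title": "LM Studio", "provider": "lmstudio", "model": "llama2-7b"}],
  "contextProviders": [
    {"name": "folder", "params": {}},
    {"name": "codebase", "params": {}}
  ],
  "embeddingsProvider": {"provider": "transformers.js"}
}
"""

summary = summarize_config(SAMPLE)
print(summary)
```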

Experimenting with External Data Sources

To further enhance your RAG-like system, explore integrating external data sources such as APIs or web scraping mechanisms. This approach can bring real-time information into the retrieval process, so the model’s outputs are no longer limited to what is indexed locally.

- Benefits: By incorporating real-time data, your assistant can provide up-to-date responses that reflect the latest information, making it even more powerful and adaptable.
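One way to experiment with this is to fetch data from an API and wrap it as a labelled context snippet that can sit next to the codebase results. The following is a sketch under stated assumptions: the source name `release-feed` and the sample data are hypothetical, `fetch_json` is shown but not called, and any real endpoint would need its own error handling and rate limiting.

```python
import json
import urllib.request
from datetime import datetime, timezone


def fetch_json(url: str, timeout: float = 5.0) -> dict:
    """Fetch a JSON document from an HTTP API (network call, not run here)."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return json.load(response)


def to_context(source: str, data: dict, fetched_at: datetime,
               max_chars: int = 500) -> str:
    """Format external data as a prompt snippet labelled with origin and time."""
    body = json.dumps(data, sort_keys=True)[:max_chars]
    stamp = fetched_at.strftime("%Y-%m-%d %H:%M UTC")
    return f"[external: {source}, fetched {stamp}]\n{body}"


# Hypothetical data standing in for a real API response.
snippet = to_context(
    "release-feed",
    {"latest": "1.2.3"},
    datetime(2024, 9, 2, 12, 0, tzinfo=timezone.utc),
)
print(snippet)
```

Stamping each snippet with its source and fetch time lets the model, and you, tell fresh external data apart from locally indexed content.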

Conclusion

By enhancing your current Qwen2 7B-based solution with codebase retrieval and context memory, you can evolve it into a more RAG-like system that offers improved accuracy, relevance, and conversational coherence. Your existing setup, combined with the Continue plugin’s retrieval capabilities, provides a strong foundation for building an effective assistant tailored to your development workflow.

Looking ahead, further advancements in open-source LLMs and retrieval technologies will continue to expand the possibilities, making it increasingly feasible to deploy sophisticated, RAG-like solutions on consumer-grade hardware.